[Editor’s note: I am currently at CSICon. Consequently, even though I’ve written about this study elsewhere before, I think it’s good to revise, update, and repurpose the saga for SBM, given the way it shows how antivaccine “researchers” work. Also, I wanted to have a reference to this in SBM.]

For antivaxers, aluminum is the new mercury.

Let me explain, for the benefit of those not familiar with the antivaccine movement. For antivaxers, it is, first and foremost, always about the vaccines. Always. Whatever the chronic health issue in children, vaccines must have done it. Autism? It’s the vaccines. Sudden infant death syndrome? Vaccines, of course. Autoimmune diseases? Obviously it must be the vaccines causing it. Obesity, diabetes, ADHD? Come on, you know the answer!

Because antivaxers will never let go of their obsession with vaccines as The One True Cause Of All Childhood Health Problems, their explanations for how vaccines supposedly cause all this harm are ever-morphing in response to disconfirming evidence. Here’s an example. Back in the late 1990s and early 2000s, antivaxers in the US (as opposed to in the UK, where the MMR vaccine was the bogeyman) focused on mercury in vaccines as the cause of autism. That’s because many childhood vaccines contained thimerosal, a preservative that contains mercury. In an overly cautious bit of worshiping at the altar of the precautionary principle, in 1999 the CDC recommended removing the thimerosal from childhood vaccines, and as a result it was removed from most vaccines by the end of 2001. (Some flu vaccines continued to contain thimerosal for years after that, but no other childhood vaccine did, and these days it’s uncommon to find thimerosal-containing vaccines of any kind.)

More importantly, the removal of thimerosal from childhood vaccines provided a natural experiment to test the hypothesis that mercury causes or predisposes to autism. After all, if mercury in vaccines caused autism, the near-complete removal of that mercury from childhood vaccines in a short period of time should have resulted in a decline in autism prevalence beginning a few years after the removal. Guess what happened? Autism prevalence didn’t decline. It continued to rise. To scientists, this natural experiment provided a highly convincing falsification of the hypothesis, although those who belong to the strain of the antivaccine movement sometimes referred to as the mercury militia still flog mercury as a cause of autism even now. Robert F. Kennedy, Jr. is perhaps the most famous mercury militia member, although of late he’s been sounding more and more like a run-of-the-mill antivaxer.

Which brings us to aluminum. Over the last few years, there have been a number of “studies,” reminiscent of the pseudoscience Mark and David Geier published on mercury and autism back in the day, that have ultimately embarrassed two researchers who have become darlings of the antivaccine movement and whose previous studies and review articles have been discussed here before. Yes, I’m referring to Christopher Shaw and Lucija Tomljenovic in the Department of Ophthalmology at the University of British Columbia. Both have a long history of publishing antivaccine “research,” mainly falsely blaming the aluminum adjuvants in vaccines for autism and, well, just about any health problem children have, and blaming Gardasil for premature ovarian failure and all manner of woes up to and including death. Shaw was even prominently featured in the rabidly antivaccine movie The Greater Good. Not surprisingly, they’ve had a paper retracted, as well.

Actually, make that two. Their most recent study is to be retracted. I’ll get back to that in a moment. First, I think it’s worth looking at why the study is so bad, as well as why and how it was retracted. It’s a tale of either extreme carelessness, outright scientific fraud, or both.

Torturing mice in the name of pseudoscience

Before looking at the study itself, specifically the experiments included in it, let’s consider the hypothesis allegedly being tested by Shaw and Tomljenovic, because experiments in any study should be directed at falsifying the hypothesis. Unfortunately, there is no clear statement of the hypothesis where it belongs, namely in the introduction. Instead, what we get is this:

Given that infants worldwide are regularly exposed to Al [aluminum] adjuvants through routine pediatric vaccinations, it seemed warranted to reassess the neurotoxicity of Al in order to determine whether Al may be considered as one of the potential environmental triggers involved in ASD.

In order to unveil the possible causal relationship between behavioral abnormalities associated with autism and Al exposure, we initially injected the Al adjuvant in multiple doses (mimicking the routine pediatric vaccine schedule) to neonatal CD-1 mice of both sexes.

This was basically a fishing expedition in which the only real hypothesis was that “aluminum in vaccines is bad and causes bad immune system things to happen in the brain.” “Fishing expeditions” in science are studies in which the hypothesis is not clear and the investigators are looking for some sort of effect that they suspect they will find. In fairness, fishing expeditions are not a bad thing in and of themselves—indeed, they are often a necessary first step in many areas of research—but they are hypothesis-generating, not hypothesis-confirming. After all, there isn’t a clear hypothesis to test; otherwise it wouldn’t be a fishing expedition. The point is that this study does not confirm or refute any hypothesis, much less provide any sort of slam-dunk evidence that aluminum adjuvants cause autism.

Now, let’s look at the paper’s shortcomings before—shall we say?—issues were noted regarding several of the figures (to be discussed in the next section).

Moving along, I note that this was a mouse experiment, and, pre-retraction, antivaxers were selling Shaw’s paper as compelling evidence that vaccines cause autism through their aluminum adjuvants causing an inflammatory reaction in the brain. Now, seriously. Mouse models can be useful for a lot of things, but, viewed critically, autism is not really one of them for the most part. After all, autism is a human neurodevelopmental disorder diagnosed entirely by behavioral changes, and correlating mouse behavior with human behavior is very problematic. Indeed, correlating the behavior of any animal, even a primate, with human behavior is fraught with problems. Basically, there is no well-accepted single animal model of autism, and autism research has been littered with mouse models of autism that were found to be very much wanting. (“Rain mouse,” anyone?) Despite the many mouse strains touted as relevant to autism, almost none of them truly are.

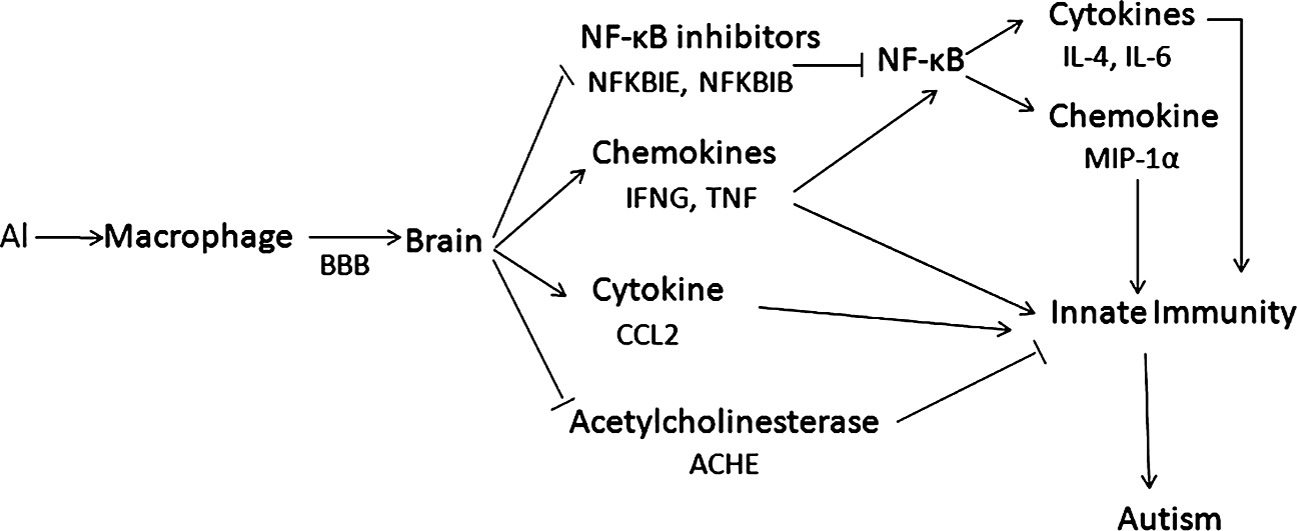

The assumption behind this study was that immune changes in the brain of mice will be relevant to immune activation in the brains of autistic humans. That is an assumption that hasn’t yet been confirmed with sufficient rigor to view this study’s results as any sort of compelling evidence that aluminum adjuvants cause autism—even if they do cause inflammatory changes in mouse brains. Yes, the authors included this important-looking diagram describing how they think immune system activation causes autism:

In the end, though, as impressive as it is, the relevance of this chart to autism is questionable at best, as is the relevance of this study.

So let’s look at the mouse strain chosen by the investigators, CD-1 mice. Basically, there’s nothing particularly “autistic” (even in terms of existing mouse models purported to be relevant to autism) about these mice, which most companies selling them describe in their catalogues as “general purpose.” The authors apparently chose them because they had used them in previous studies: one in which they reported that aluminum injections caused motor neuron degeneration (nope, no autism) and another crappy paper in the same journal from 2013 purporting to link aluminum with adverse neurological outcomes. That’s it.

As for the experiment itself, neonatal mice were divided into two groups: a control group that received saline injections and an experimental group that received injections of aluminum hydroxide in doses timed to purportedly mimic the pediatric vaccine schedule. Looking over the schedule used, I can’t help but note that there is a huge difference between human infant development and mouse development. The mice received aluminum doses, claimed to be equivalent by weight to what human babies get, six times in the first 17 days of life. By comparison, in human babies these doses are separated by months. In addition, in human babies, vaccines are injected intramuscularly (into a muscle). In this study, the mice were injected subcutaneously (under the skin). This difference immediately calls into question the applicability of this model to humans, even if you accept the mouse model as valid. The authors stated that they did it because they wanted to follow protocols previously used in their laboratory. In some cases, that can be a reasonable rationale for an experimental choice, but in this case the original choice was questionable in the first place. Blindly sticking with the same bad choice is just dumb.

So what endpoints were examined in the mice injected with aluminum hydroxide compared to saline controls? After 16 weeks, the mice were euthanized and their brains harvested to measure gene expression and the levels of the proteins of interest. Five males and five females from each group were “randomly paired” for “gene expression profiling.” Now, when I think of gene expression profiling, I usually think of either cDNA microarray experiments, in which the levels of thousands of genes are measured at the same time, or next generation sequencing, in which the level of every RNA transcript in the cell can be measured simultaneously. That doesn’t appear to be what the authors did. Instead, they used a technique known as PCR to measure the messenger RNA levels of a series of cytokines. In other words, they measured the amount of RNA in the brain coding for a set of immune proteins that the authors chose as relevant to inflammation. The authors also did Western blots for many of those proteins. In a Western blot, proteins are separated on a gel, blotted onto a membrane, and then probed with specific antibodies, resulting in bands that can be detected by a number of techniques, including autoradiography or chemiluminescence, both of which can be recorded on film on which the relevant bands can be visualized and measured. Basically, what the authors did wasn’t really gene expression profiling. It was measuring a bunch of genes and proteins and hoping to find a difference.

There’s an even weirder thing. The authors didn’t use quantitative real-time reverse transcriptase PCR, which has been the state of the art for measuring RNA message levels for quite some time. Rather, they used a very old, very clunky form of PCR that can only produce, at best, semiquantitative results. (That’s why we used to call it semiquantitative PCR.) Quite frankly, in this day and age, there is absolutely zero excuse for choosing this method for quantifying gene transcripts. If I were a reviewer for this article, I would have recommended not publishing it based on this deficiency alone. Real-time PCR machines, once very expensive and uncommon, are widely available. (Hell, I managed to afford a very simple one in my lab nearly 15 years ago.) These days, any basic or translational science department worth its salt has at least one available to its researchers.

The reason that this semiquantitative technique is considered inadequate is that the amount of PCR product grows exponentially, roughly doubling with every cycle of PCR, asymptotically approaching a maximum as the primers are used up.

It usually takes around 30-35 cycles before the reaction saturates and differences in the intensity of the DNA bands, when they are separated on a gel, become indistinguishable. That’s why PCR was originally considered primarily a “yes/no” test: either the RNA being measured was there and produced a PCR band, or it wasn’t. In this case, the authors used 30 cycles, which is more than enough to result in saturation. (Semiquantitative PCR usually stops around 20-25 cycles, or even fewer.) And I haven’t (yet) even mentioned that the authors didn’t use DNase to eliminate the small amounts of DNA that contaminate nearly all RNA isolations. The primers used for PCR pick up DNA as well as any RNA, and without DNase treatment, DNA for the genes of interest is virtually guaranteed to contaminate the specimens. Yes, you molecular biologists out there, I know that’s simplistic, but my audience doesn’t consist of molecular biologists.
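To see why running PCR into saturation flattens real differences, here is a toy simulation in Python. It is a minimal sketch, not a model of the authors’ actual reactions: it assumes perfect doubling per cycle while product is scarce, with the per-cycle efficiency falling off logistically as product approaches an arbitrary plateau (a stand-in for primers and other reagents running out), and it compares two hypothetical samples whose starting template differs threefold. The function names and the plateau value are illustrative assumptions.

```python
def amplify(start: float, cycles: int, efficiency: float = 1.0,
            plateau: float = 1e9) -> float:
    """Toy PCR model (an assumption, not the paper's chemistry): product grows
    by a factor of (1 + efficiency) per cycle while far below the plateau,
    and the growth rate falls off logistically as product nears the plateau."""
    amount = start
    for _ in range(cycles):
        amount += efficiency * amount * (1.0 - amount / plateau)
    return amount


def apparent_fold_change(cycles: int, true_fold: float = 3.0) -> float:
    """Ratio of final product for two samples whose starting template differs by true_fold."""
    return amplify(true_fold, cycles) / amplify(1.0, cycles)


for cycles in (15, 20, 25, 30, 35):
    print(f"{cycles} cycles: apparent fold change = {apparent_fold_change(cycles):.2f}")
```

In this toy model the threefold difference survives essentially intact through about 20 cycles, starts to erode by 25, and by 30 to 35 cycles the two samples yield nearly the same amount of product. Where the plateau actually falls depends on template amount and reagents, which is why the linear range of a semiquantitative assay has to be established rather than assumed.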

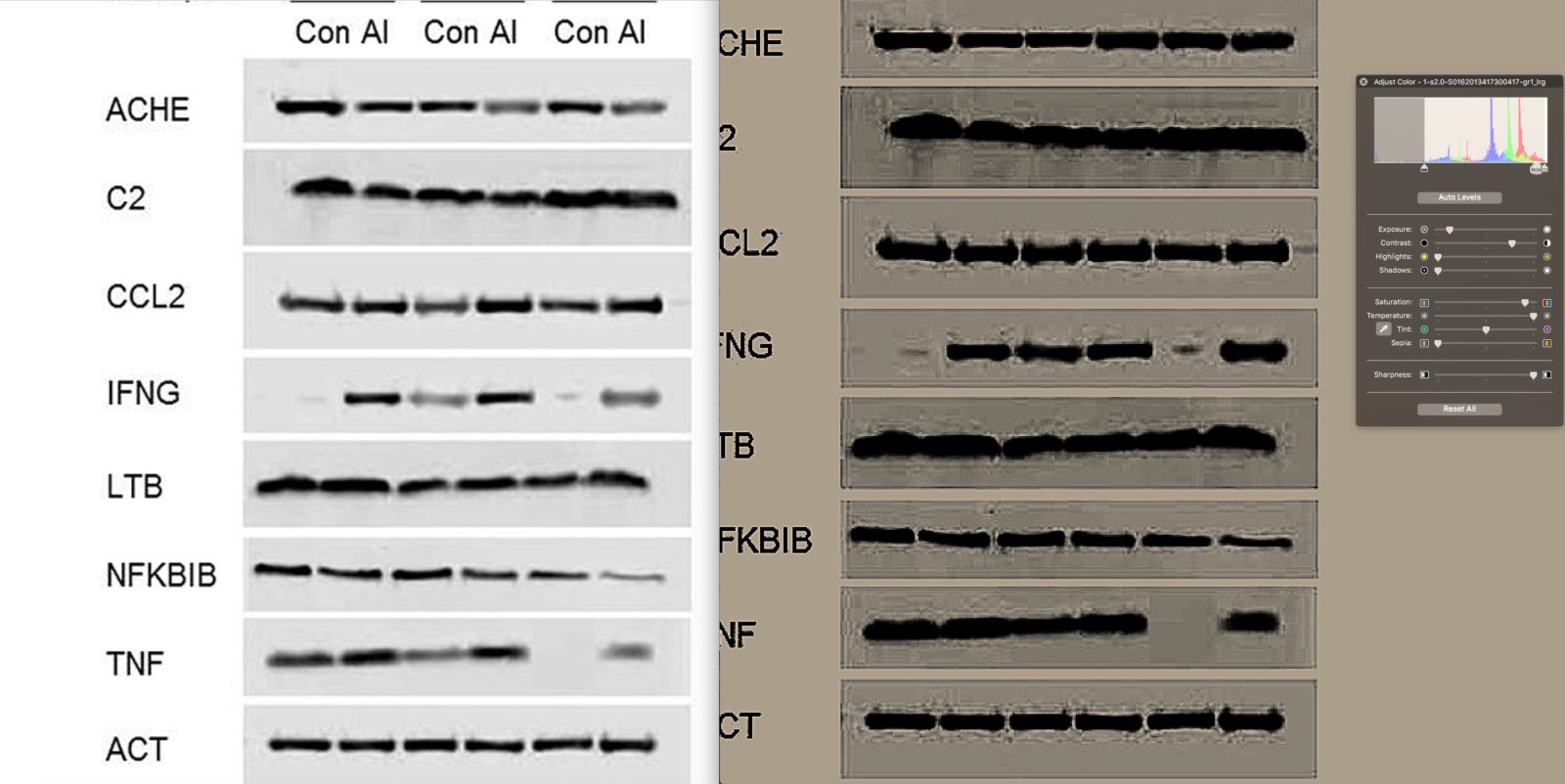

Now, take a look at Figures 1A and 1B as well as Figures 2A and 2B. (You can do it if you want. The article is open access and, for reasons unclear to me, has not yet been removed from the journal website.) Look at the raw bands in the A panels of the figures. Do you see much difference, except for IFNG (interferon gamma) in Figure 1A? I don’t. What I see are bands of roughly the same intensity, even the ones that are claimed to vary threefold. In other words, I am very skeptical that the investigators saw much of a difference in gene expression between controls and the aluminum-treated mice. In fairness, for the most part, the protein levels as measured by Western blot did correlate with what was found on PCR, but there’s another odd thing. The investigators didn’t do Western blots for all the same proteins whose gene expression they measured by PCR. They present primers for 27 genes, but only show blots for 18 (17 inflammatory genes plus beta actin, which was used as a standard to normalize the values for the other 17 genes).
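For readers who haven’t done densitometry, normalizing to beta actin amounts to a pair of ratios. The Python sketch below assumes one common way of doing it (target band divided by the actin band in the same lane, averaged per group, then treated over control); the paper’s exact procedure may differ, and the lane values are invented for illustration, not taken from the paper.

```python
from statistics import mean


def normalized_ratio(target: float, actin: float) -> float:
    """Target band density divided by the beta actin band from the same lane."""
    return target / actin


def fold_change(treated: list[tuple[float, float]],
                control: list[tuple[float, float]]) -> float:
    """Mean actin-normalized density in treated lanes over the same in control lanes.
    Each lane is a (target_density, actin_density) pair."""
    treated_mean = mean(normalized_ratio(t, a) for t, a in treated)
    control_mean = mean(normalized_ratio(t, a) for t, a in control)
    return treated_mean / control_mean


# Hypothetical densitometry readings in arbitrary units, NOT data from the paper.
control_lanes = [(1000.0, 2000.0), (1100.0, 2100.0), (950.0, 1900.0)]
treated_lanes = [(3000.0, 2000.0), (3150.0, 2050.0), (2900.0, 1950.0)]
print(f"fold change: {fold_change(treated_lanes, control_lanes):.2f}")
```

The point is simply that a claimed threefold change means the target bands in the treated lanes should be roughly three times as dense as those in control lanes with comparable actin bands, which is usually visible by eye unless the bands are saturated.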

I also questioned the statistical tests chosen by the authors. They examined each gene separately and used Student’s t-test to assess statistical significance. In reality, however, they did many comparisons, at least 17, and there’s no evidence that the authors controlled for multiple comparisons. If one sets the threshold for statistical significance at p < 0.05 and makes 20 independent comparisons in which there is no real difference, by random chance alone one would expect about one of them to come out “significant,” and the probability that at least one does is about 64%.

Add to that the fact that there is no mention of whether the people performing the assays were blinded to experimental group, and there’s a big problem. Basic science researchers often think that blinding isn’t necessary in their work, but there is a potential for unconscious bias that they all too often don’t appreciate. For example, the authors used ImageJ, free image processing software developed by the NIH. I’ve used ImageJ before. It’s commonly used to quantify the density of bands on gels, even though it’s old software and hasn’t been updated in years. Basically, it involves manually drawing outlines of the bands, setting the background, and then letting the software calculate the density of the bands. The potential for bias shows up in how you draw the lines around the bands and set the backgrounds. As oblivious as they seem to be to this basic fact, basic scientists are just as prone to unconscious bias as the rest of us, and, absent blinding, in a study like this there is definitely the potential for unconscious bias to affect the results. In fairness, few basic science researchers bother to blind whoever is quantifying Western blots or ethidium bromide-stained DNA gels of PCR products, but that’s just a systemic problem in biomedical research that I not infrequently invoke when I review papers. Shaw and Tomljenovic are merely making the same mistake that at least 90% of basic scientists make.
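The arithmetic behind the multiple-comparisons problem takes only a few lines. This Python sketch assumes the tests are independent, which measurements of related inflammatory genes in the same animals probably are not, so treat the numbers as illustrative. It also shows Holm’s step-down procedure, one standard way to control the family-wise error rate; that choice is mine for illustration, not something the paper or its critics specified.

```python
import random


def family_wise_error_rate(alpha: float, comparisons: int) -> float:
    """Probability of at least one false positive among independent tests
    when there is no real effect anywhere."""
    return 1.0 - (1.0 - alpha) ** comparisons


def simulated_error_rate(alpha: float, comparisons: int,
                         trials: int = 20_000, seed: int = 1) -> float:
    """Monte Carlo check: under the null hypothesis each p-value is uniform on [0, 1)."""
    rng = random.Random(seed)
    hits = sum(
        any(rng.random() < alpha for _ in range(comparisons))
        for _ in range(trials)
    )
    return hits / trials


def holm_reject(p_values: list[float], alpha: float = 0.05) -> list[bool]:
    """Holm's step-down correction: compare the k-th smallest p-value
    (k counted from 0) against alpha / (m - k) and stop at the first failure."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    rejected = [False] * m
    for k, i in enumerate(order):
        if p_values[i] > alpha / (m - k):
            break
        rejected[i] = True
    return rejected


for m in (1, 17, 20):
    print(f"{m:>2} tests: P(at least one false positive) = "
          f"{family_wise_error_rate(0.05, m):.2f} "
          f"(simulated {simulated_error_rate(0.05, m):.2f})")
```

With 17 uncorrected tests at p < 0.05, the chance of at least one spurious “hit” is well over 50%. A correction such as Holm’s raises the bar for each individual test so that the chance of any false positive across the whole family stays near 5%.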

But let’s step back and take the authors’ results at face value for a moment. Let’s assume that what is reported is a real effect. In the rest of the paper, the authors present evidence of changes in gene expression that suggest the activation of a molecular signaling pathway controlled by a molecule called NF-κB and that male mice were more susceptible to this effect than females. (Just like autism!) Funny, but I know NF-κB. I’ve published on NF-κB. I had an NIH R01 grant to study how my favorite protein affected NF-κB. True, I ended up abandoning that line of research because I hit some dead ends. True, I’m not as familiar with NF-κB as I used to be. But I do know enough to know that NF-κB is easy to activate and very nonspecific. I used to joke that just looking at my cells funny would activate NF-κB signaling. Also, NF-κB activation is indeed associated with inflammation, but so what? What we have is an artificial model in which the mice are dosed much more frequently with aluminum than human infants. Does this have any relevance to the human brain or to human autism? Who knows? Probably not. No, almost certainly not.

Also, the mouse immune system is different from the human immune system. None of this stops the authors from concluding:

Based on the data we have obtained to date, we propose a tentative working hypothesis of a molecular cascade that may serve to explain a causal link between Al and the innate immune response in the brain. In this proposed scheme, Al may be carried by the macrophages via a Trojan horse mechanism similar to that described for the human immunodeficiency virus (HIV) and hepatitis C viruses, travelling across the blood-brain-barrier to invade the CNS. Once inside the CNS, Al activates various proinflammatory factors and inhibits NF-κB inhibitors, the latter leading to activation of the NF-κB signaling pathway and the release of additional immune factors. Alternatively, the activation of the brain’s immune system by Al may also occur without Al traversing the blood-brain barrier, via neuroimmuno-endocrine signaling. Either way, it appears evident that the innate immune response in the brain can be activated as a result of peripheral immune stimuli. The ultimate consequence of innate immune over-stimulation in the CNS is the disruption of normal neurodevelopmental pathways resulting in autistic behavior.

That’s what we in the business call conclusions not supported by the findings of a study. On a more “meta” level, it’s not even clear whether the markers of inflammation observed in autistic brains are causative or an epiphenomenon. As Skeptical Raptor noted, it could be that the inflammation reported is caused by whatever the primary changes in the brain are that result in autism. Cause and effect are nowhere near clear. One can’t help but note that many of the infections vaccinated against cause way more activation of the immune system and cytokines than vaccination.

So what are we left with?

Basically, what we have is yet another mouse study of autism. The study purports to show that aluminum adjuvants cause some sort of “neuroinflammation,” which, it is assumed, equals autism. By even the most charitable interpretation, the best that can be said for this study is that it might show increased levels of proteins associated with inflammation in the brains of mice that had been injected with aluminum adjuvant way more frequently than human babies ever would be. Whether this has anything to do with autism is highly questionable. At best, what we have here are researchers with little or no expertise in very basic molecular biology techniques, using old, imprecise methodology and overinterpreting the differences in gene and protein levels that they found. At worst, what we have are antivaccine “researchers” who are not out for scientific accuracy but who actually want to promote the idea that vaccines cause autism. (I know, I know, it’s hard not to ask: Why not both?) If this were a first offense, I’d give Shaw and Tomljenovic the benefit of the doubt, but this is far from their first offense. Basically, this study adds little or nothing to our understanding of autism or even of the potential effects of aluminum adjuvants. It was, like so many studies before it, the torture of mice in the name of antivax pseudoscience. The mice used in this study died in vain in a study supported by the profoundly antivaccine Dwoskin Foundation.

Enter fraud?

Not long after Shaw’s paper was published, serious questions were raised about the figures in the paper. To understand the problem, you need to know that after a PCR reaction is run, the PCR products (DNA fragments amplified by the PCR reaction) are separated by placing them in an agarose gel and running an electrical current through it. Gel electrophoresis works because DNA, which is negatively charged, migrates toward the positive electrode, and agarose, once it solidifies, forms a gel that separates the DNA fragments by size, with smaller fragments moving through it faster. The gel can then be stained with ethidium bromide, whose fluorescence allows visualization of the bands, which can be assessed for size and purity. Photos of the gel can be taken and subjected to densitometry to estimate how much DNA is in each band relative to the other bands.

To measure protein, Western blots work a little differently. Isolated cell extracts or protein mixtures are subjected to polyacrylamide gel electrophoresis (PAGE) with a denaturing detergent (SDS). Like DNA, the SDS-coated proteins migrate toward the positive electrode, and the pores in the gel impede their progress, separating them primarily by size. The proteins are then transferred to a membrane (the Western blot) and visualized using a primary antibody to the desired protein, followed by a secondary antibody carrying some sort of label. In the old days, we often used radioactivity. These days, we mostly use chemiluminescence. Blots are then exposed to film or, more frequently today, to a phosphoimager plate, which provides a much larger linear range for detecting the chemiluminescence than old-fashioned film. Just like DNA gels, the bands can be quantified using densitometry. In both cases, it’s very important neither to “burn” (overexpose) the film, which pushes the band intensity out of the linear range, nor to underexpose it, in which case noise can cause problems. How the lines are drawn around the bands in the densitometry software and how the background is calculated also matter. More modern software can do it fairly automatically, but there is almost always a need to tweak the outlines chosen, which is why I consider it important that whoever is doing the densitometry be blinded to experimental group, as bias can be introduced in how the bands are traced.
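To make the point about background concrete, here is a hypothetical Python sketch that integrates a one-dimensional intensity profile across a band after subtracting a flat background. The profiles and background levels are invented, and real densitometry software (ImageJ included) offers its own background methods that this does not attempt to reproduce. It shows only that the same two bands can yield quite different ratios depending on where the background is set.

```python
def band_density(profile: list[float], start: int, end: int,
                 background: float) -> float:
    """Sum of pixel intensities in profile[start:end] above a flat background,
    clipping negative values to zero (one simple densitometry convention)."""
    return sum(max(v - background, 0.0) for v in profile[start:end])


# Hypothetical lane profiles (pixel intensities across one band), not real data.
# The true flat background in both lanes is 20.
control = [20.0] * 5 + [40.0, 60.0, 40.0] + [20.0] * 5
treated = [20.0] * 5 + [50.0, 80.0, 50.0] + [20.0] * 5

for background in (0.0, 20.0, 35.0):
    ratio = (band_density(treated, 5, 8, background)
             / band_density(control, 5, 8, background))
    print(f"background {background:>4}: treated/control = {ratio:.2f}")
```

With the background set at its true level of 20, the ratio is 1.5; ignoring the background pulls it down toward 1.3, and setting it too high inflates it past 2. Someone who knows which lanes are “supposed” to differ can nudge choices like these without meaning to, which is the argument for blinding.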

So why did I go through all this? Hang on, I’ll get to it. First, however, I’d like to point out to our antivaccine “friends” that peer review doesn’t end when a paper is published. Moreover, social media and the web have made it easier than ever to see what other scientists think of published papers. In particular, there is a website called PubPeer, which represents itself as an “online journal club.” More importantly, for our purposes, PubPeer is a site where a lot of geeky scientists with sharp eyes for anomalies in published figures discuss papers and figures that seem, well, not entirely kosher. It turns out that some of these scientists have been going over Shaw and Tomljenovic’s paper, and guess what? They’ve been finding stuff. In fact, they’ve been finding stuff that to me (and them) looks rather…suspicious.

One, for instance, took figure 1C of the paper and adjusted the background and contrast to accentuate differences in tones:

It was immediately noted:

- A clear and deliberate removal of the Male 3 Control TNF result. This isn’t an unacknowledged splice, as there is no background pattern from a gel contiguous with either band, left or right.

- Removal of the left half of the Male 1 Control IFN-g. Dubious also about Male 3 Control IFN-g, as the contrast highlight shows boxing around the band.

- What appears like an unacknowledged splice in ACHE blot, between AI Animal 2, Control Animal 3

Comparing this representative blot to the densitometry accompanying it, they score from 5 independent experiments IFN-g fold change from control to AI, relative to actin, as on average 4.5, with an SEM ranging from ~2.7 to 6.5. This seems too good to be true.

Look at the band. It’s the second from last band. It looks as though the band has been digitally removed. There is an obvious square there. The edges are clear. Now, this could be a JPEG compression artifact. Indeed, one of the commenters is very insistent about reminding everyone that compression artifacts can look like a square and fool the unwary into thinking that some sort of Photoshopping had occurred. However, I do agree with another of the PubPeer discussants that this is enough of a problem that the journal should demand the original blot.

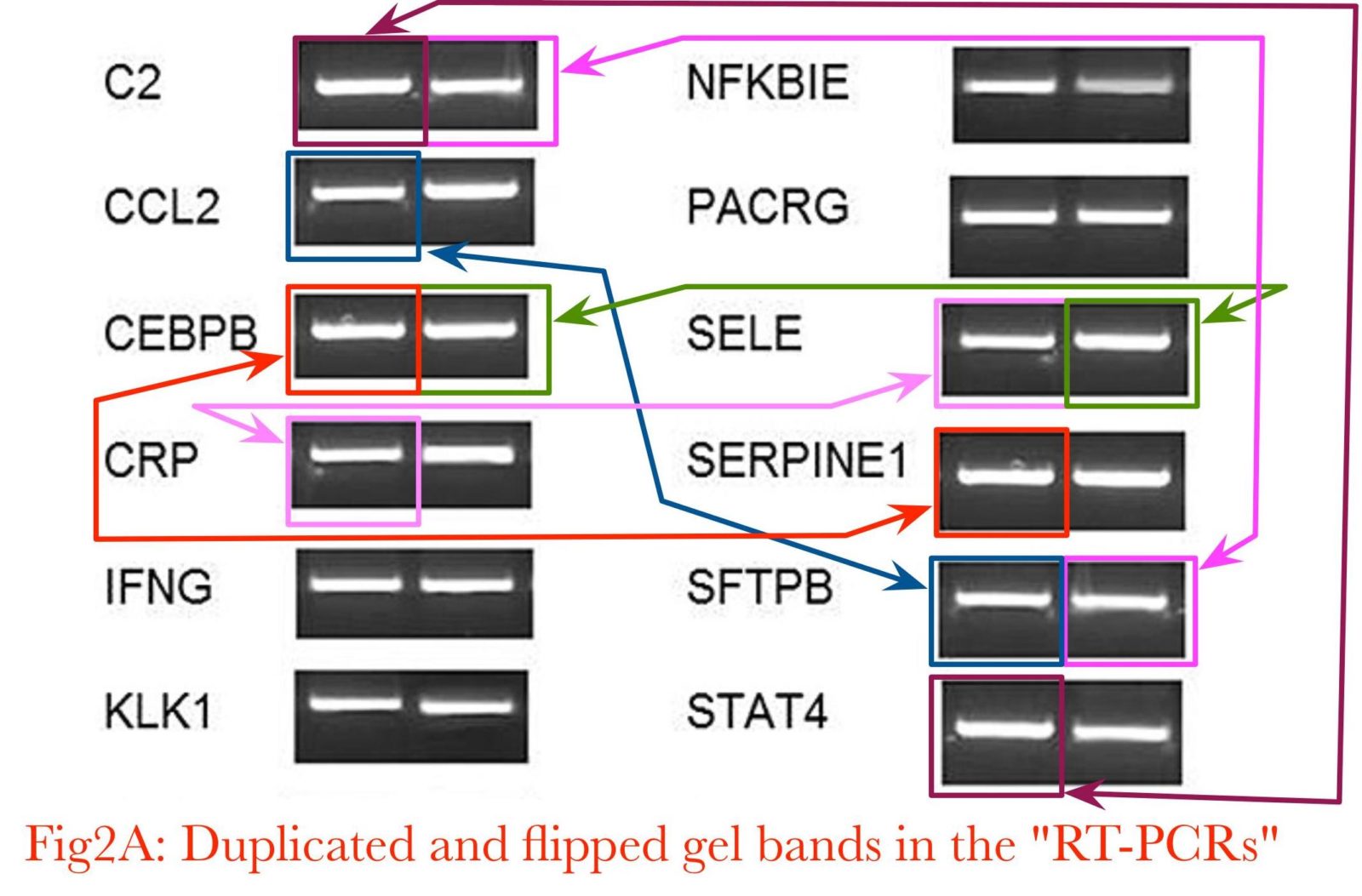

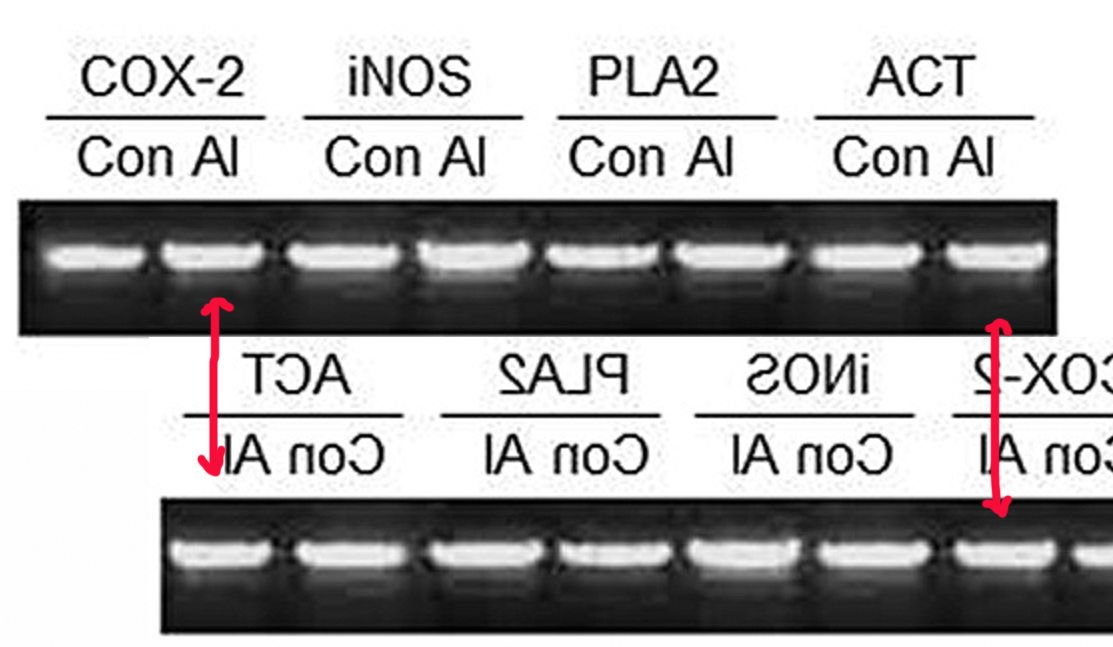

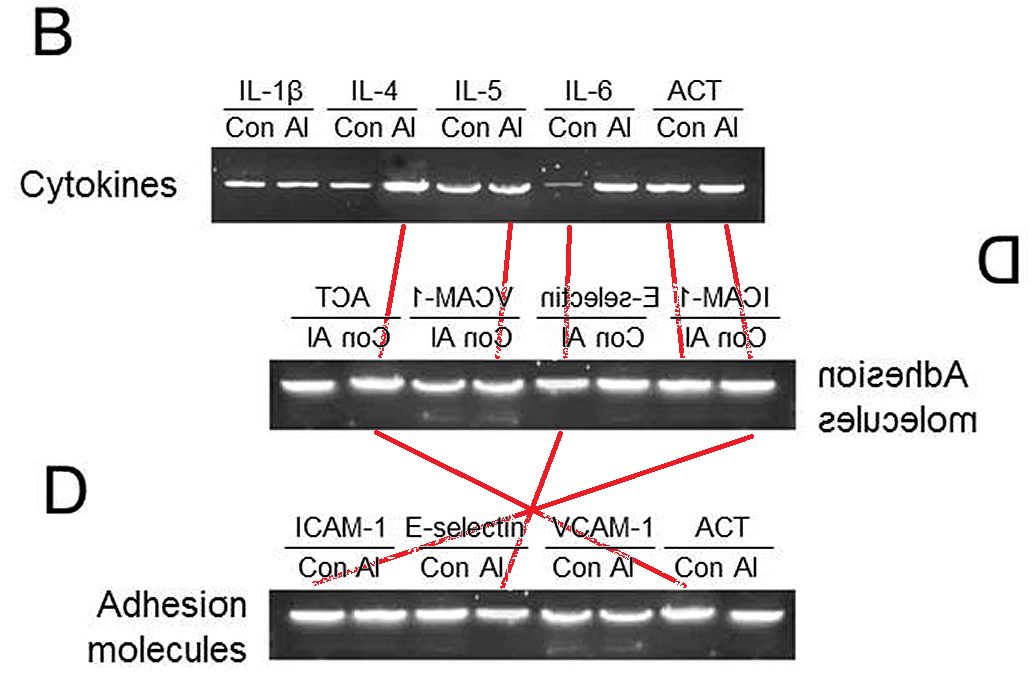

On this one, I’ll give Shaw and Tomljenovic the benefit of the doubt. (Whether they deserve it or not, you can judge for yourself.) That might be a compression artifact. Other problems discovered in the gels are not so easily dismissed. For instance, there definitely appears to be the ol’ duplicated and flipped gel bands trick going on in Figure 2A:

Spotting these takes a little bit of skill, but look for distinctive parts of bands and then look to see if they show up elsewhere. It’s also necessary to realize that there could be multiple different exposures of the same band, such that the same band can appear more or less intense and mirror-imaged. You have to know what to look for, and I fear that some readers not familiar with looking at blots like these might not see the suspicious similarities, even when pointed out. Still, let’s take a look. There are more examples, for instance, these two bands in Figure 4C:

And Figures 4B and 4D, where bubbles on the gels serve as markers:

You can look at the rest of the PubPeer images for yourself and decide if you agree that something fishy is going on here. I’ve seen enough that I think there is. Others noted that Shaw and Tomljenovic have engaged in a bit of self-plagiarism, too. Figure 1 in the 2017 paper is identical (and I do mean identical, except that the bars in the older paper are blue) to a figure in a paper they published in 2014. Basically, they threw a little primary data into one of their crappy review articles trying to blame “environment” (i.e., vaccines) for autism, this one published in 2014 in OA Autism. Don’t take my word for it. Both articles are open access, and you can judge for yourself, at least as long as Shaw’s most recent paper remains online.
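The side-by-side comparison described above, hunting for the same band reused elsewhere, possibly mirror-imaged or at a different exposure, can be roughly mimicked in code. The Python sketch below is a hypothetical illustration, not how the PubPeer commenters worked: it compares one-dimensional intensity profiles across two bands using Pearson correlation, which ignores changes in overall brightness and contrast (a crude stand-in for different exposures), and it also tries the second profile reversed. The profiles are invented.

```python
import math


def pearson(a: list[float], b: list[float]) -> float:
    """Pearson correlation; unchanged by scaling or offsetting either profile."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    norm_a = math.sqrt(sum((x - mean_a) ** 2 for x in a))
    norm_b = math.sqrt(sum((y - mean_b) ** 2 for y in b))
    return cov / (norm_a * norm_b)


def duplicate_score(band_a: list[float], band_b: list[float]) -> tuple[float, str]:
    """Best correlation between two equal-length profiles, trying band_b as-is and mirrored."""
    direct = pearson(band_a, band_b)
    mirrored = pearson(band_a, band_b[::-1])
    return (mirrored, "mirrored") if mirrored > direct else (direct, "direct")


# Hypothetical horizontal intensity profiles across three bands.
band = [3.0, 8.0, 15.0, 22.0, 18.0, 9.0, 12.0, 5.0]   # distinctive bump at index 6
suspect = [2.0 * v + 10.0 for v in reversed(band)]      # same band, flipped, brighter exposure
unrelated = [4.0, 6.0, 14.0, 20.0, 21.0, 13.0, 6.0, 3.0]

for name, other in (("suspect", suspect), ("unrelated", unrelated)):
    score, how = duplicate_score(band, other)
    print(f"{name}: r = {score:.3f} ({how})")
```

Notice that even the unrelated band scores around 0.9, because most bands share a similar hump-shaped profile. That is why experienced eyes look for distinctive features such as bubbles, specks, and odd edges rather than overall band shape, and why a high score is at most a reason to look harder, never proof of manipulation.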

And that’s still not all. Let’s pay a visit to our scaly friend, Skeptical Raptor, who notes that The Mad Virologist and the Blood-Brain Barrier Scientist jointly analyzed the paper and found:

But there are six other key points that limit what conclusions can be drawn from this paper:

- They selected genes based on old literature and ignored newer publications.

- The method for PCR quantification is imprecise and cannot be used as an absolute quantification of expression of the selected genes.

- They used inappropriate statistical tests that are more prone to giving significant results which is possibly why they were selected.

- Their dosing regime for the mice makes assumptions on the development of mice that are not correct.

- They gave the mice far more aluminum sooner than the vaccine schedule exposes children to.

- There are irregularities in both the semi-quantitative RT-PCR and Western blot data that strongly suggests that these images were fabricated. This is probably the most damning thing about the paper. If the data were manipulated and images fabricated, then the paper needs to be retracted and UBC needs to do an investigation into research misconduct by the Shaw lab.

Taken together, we cannot trust Shaw’s work here and if we were the people funding this work, we’d be incredibly ticked off because they just threw away money that could have done some good but was instead wasted frivolously. Maybe there’s a benign explanation for the irregularities that we’ve observed, but until these concerns are addressed this paper cannot be trusted.

I note that they go into even more detail about the problems with the images that have led me (and others) to be suspicious of image manipulation, concluding:

These are some serious concerns that raise the credibility of this study and can only be addressed by providing a full-resolution (300 dpi) of the original blots (X-ray films or the original picture file generated by the gel acquisition camera).

There has been a lot of chatter on PubPeer discussing this paper and many duplicated bands and other irregularities have been identified by the users there. If anyone is unsure of how accurate the results are, we strongly suggest looking at what has been identified on PubPeer as it suggests that the results are not entirely accurate and until the original gels and Western blots have been provided, it looks like the results were manufactured in Photoshop.

I agree. Oh, and I agree with their criticism of the statistics. I had already brought up the failure to control for multiple comparisons, but Shaw and Tomljenovic also used a test that assumes normally distributed data when their data obviously were not normally distributed.
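For small groups whose data clearly aren’t normal, one assumption-light alternative to Student’s t-test is a permutation test. The Python sketch below is my illustration of that general technique, not the test the critics recommended (a Mann-Whitney U test is another common choice), and the data are hypothetical. With groups as small as five, there are only 252 ways to split ten values into two groups of five, so even perfectly separated groups cannot get a two-sided p-value much below about 0.008; small samples have limited power however the statistics are done.

```python
import math
import random


def permutation_test(a: list[float], b: list[float],
                     n_permutations: int = 10_000, seed: int = 0) -> float:
    """Two-sided permutation test on the difference in group means.

    No normality assumption: the null distribution comes from reshuffling
    the group labels. math.fsum gives a correctly rounded sum, so the same
    split always yields exactly the same difference regardless of order.
    """
    def mean_diff(x: list[float], y: list[float]) -> float:
        return abs(math.fsum(x) / len(x) - math.fsum(y) / len(y))

    rng = random.Random(seed)
    observed = mean_diff(a, b)
    pooled = list(a) + list(b)
    n_a = len(a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        if mean_diff(pooled[:n_a], pooled[n_a:]) >= observed:
            extreme += 1
    # Counting the observed split itself keeps the p-value from being zero.
    return (extreme + 1) / (n_permutations + 1)


control = [1.0, 1.1, 0.9, 1.0, 1.2]   # hypothetical values, not from the paper
treated = [2.8, 3.1, 2.9, 3.3, 3.0]
print(f"p = {permutation_test(control, treated):.4f}")
```

Because the null distribution is built from the data themselves, a single wild value in one group inflates the spread of reshuffled differences too, instead of being treated as if it came from a tidy bell curve.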

The retraction

Not surprisingly, the journal that published this turd of a paper, The Journal of Inorganic Biochemistry, received a lot of commentary and complaints, all of which clearly had an effect. Basically, as I mentioned above, the editor of the journal decided to retract the paper. Not surprisingly, Shaw is making excuses:

Shaw, a professor at UBC’s department of ophthalmology, said he and the lab ran their own analysis of the figures in question after seeing allegations from PubPeer on Sept. 24. He said he requested a retraction from the journal within two days and notified the university.

“It appears as if some of the images in mostly what were non-significant results had been flipped,” Shaw told CBC on Thursday. “We don’t know why, we don’t know how … but there was a screw-up, there’s no question about that.”

Shaw said the lab can’t confirm how the figures were allegedly altered because he claims original data needed for comparison is no longer at the UBC laboratory.

“We don’t think that the conclusions are at risk here, but because we don’t know, we thought it best to withdraw,” the researcher said.

Asked how the seemingly wonky figures weren’t caught before publication, Shaw said it was “a good question.”

Indeed it is. Too bad Shaw doesn’t have a good answer to that good question. Actually, in fairness, he does have part of a point here:

“We were always under the impression that, based on our viewing of the original data a couple years ago and our subsequent analysis of these data, that everything was fine,” he said. “One double-checks this at various stages in the process, but by the time you’ve looked at them enough times and done the various analyses on them, you do tend to believe they’re right.

“When you look at these kinds of [data], unless you look at them under very, very high power and magnify them 20 times — which no one does, by the way — you would not necessarily see that there was anything untoward,” the professor said.

No, it was not necessary to look at the figures under high power to catch the image manipulation. The PubPeer denizens who found it certainly didn’t need such tools to see the problems with the various images, just trained eyes. On the other hand, as a researcher myself, I can understand how, looking at the same figures over and over again, one tends to miss things about them. It’s not a valid excuse, however. Neither is this:

Shaw claims the original data is in China, with an analyst who worked on the paper.

The professor claimed the analyst told him the data are “stuck there.”

“It’s like ‘the dog ate my homework.’ What are you going to do?”

He noted that, even if the original data are recovered, he thinks “this paper is dead” for credibility reasons.

This is, of course, appalling. For example, the NIH requires that the raw data be kept by the principal investigator for ten years. True, Shaw’s study was funded not by the NIH but by the antivaccine Dwoskin Foundation, but his university does require investigators to keep the raw data for at least five years, which means that the data should have been kept at least until 2018.

Amusingly:

Reached by email on Friday, co-author Tomljenovic said she agreed to the retraction but said she “had nothing to do either with collecting or analyzing any of the actual data.” She declined further comment.

Then why was she a co-author? Maybe she helped write some of the antivaccine boilerplate for the introduction. Meanwhile, the paper’s first author, Dan Li, has apparently lawyered up. I wonder why. Naturally, Shaw is throwing the “pharma shill” moniker around hither, thither, and yon:

In response to the criticisms from scientists online, Shaw was dismissive.

“Anti-vaccine” researcher is an ad hominem term tossed around rather loosely at anyone who questions any aspect of vaccine safety. It comes often from blogs and trolls, some of which/whom are thinly disguised platforms for the pharmaceutical industry… Anyone who questions vaccine safety to whatever degree gets this epithet.

My view: I see vaccines as one of many useful medical interventions. Prophylactic medicine in all of its forms is great, and vaccination is a way to address infectious diseases with the goal of preventing them. But, much like other medical interventions, vaccines are not completely safe for all people, nor under all circumstances.

In follow-up questions, Shaw said that if future data does not support a link between autism and aluminum, he would reconsider his hypothesis and research.

Sure he will. Sure he will. Of course, I was not surprised at his invocation of the pharma shill gambit. It’s what antivaxers do when criticized. Also, there’s a difference between “questioning vaccine safety” and doing so based on pseudoscience and, indeed, contributing to that pseudoscience by carrying out bogus studies of one’s own designed to demonstrate that, for example, Gardasil causes death and premature ovarian failure and aluminum adjuvants cause autism, as Shaw and Tomljenovic have done extensively. That’s what makes them antivax, not “questioning” vaccine safety.

Shaw did say one thing that (I hope) turns out to be true:

Shaw said he’s likely finished working on papers concerning vaccines after this retraction.

“I’m honestly not sure at this point that I want to dabble in [vaccines] anymore,” he said. “We have some projects that are ongoing that have been funded that we feel duty-bound to complete that are on this topic. Frankly, I doubt if I will do it again after that.”

We can only hope, but I’d be willing to bet that Dwoskin Foundation money will continue to support Shaw; that is, unless the foundation decides that even Shaw is too discredited for its purposes and finds another pliable scientist to do its dirty work of demonizing vaccines.

Whatever happens, this incident does help to illustrate that science can work. Peer review might have failed, allowing this paper to be published, but post-publication peer review did work, leading to its retraction.